Decoding the Mechanics: How Do LLMs Really Work?

The advent of Large Language Models (LLMs) such as OpenAI's GPT-4 and Google's BERT has transformed the way we interact with technology. These models can generate human-like text, translate languages, summarize articles, and even write code. But how do they actually work? Let's look at the mechanics behind these marvels of modern AI.

What is an LLM?

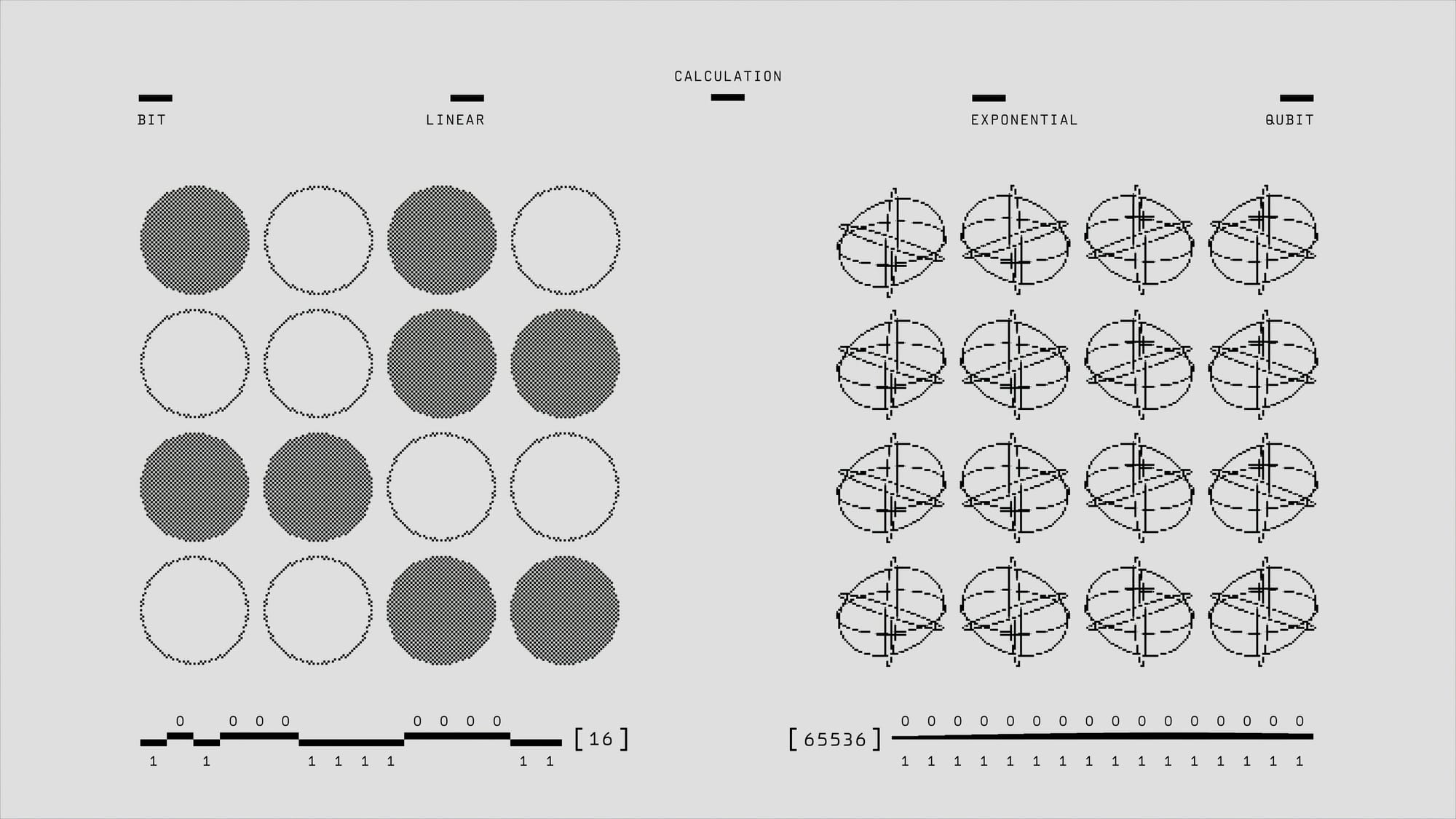

A Large Language Model is a form of artificial intelligence developed to comprehend and produce human language. These models are built using neural networks, which are computational architectures inspired by the human brain. The primary function of an LLM is to predict the next word in a sequence based on the context provided by the preceding words. This predictive capability is what allows LLMs to generate coherent and contextually relevant text.
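To make next-word prediction concrete, here is a deliberately tiny sketch. It is not how an LLM is implemented: it simply counts which word follows which in a toy corpus (a bigram model) and predicts the most frequent follower. The function names `train_bigram` and `predict_next` and the corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Toy corpus, assumed purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat" ("cat" follows "the" twice)
```

A real LLM replaces the counting table with a neural network and conditions on many preceding tokens rather than one, but the task is the same: given the context, score the possible next words.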

The Architecture of LLMs: Neural Networks

LLMs are built on neural networks: layers of simple computational units, often called neurons, connected by adjustable weights. In an LLM, these neurons process words and the relationships between them.

Input Layer: This layer receives raw text data, which is tokenized into smaller units like words or subwords. The tokens are then converted into numerical representations to be processed by the neural network.

Hidden Layers: Multiple layers of neurons process the data, extracting features and patterns. Each layer applies transformations and activations, capturing linguistic structures, syntactic nuances, and semantic meanings, refining the representation at each stage.

Output Layer: This layer produces the final output. It converts the processed representation into a probability for every token in the vocabulary. Tokens are then selected one at a time and assembled into coherent text, such as a translated sentence or a poem, based on learned patterns.
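The three layers above can be sketched as a single forward pass. This is a minimal illustration with random, untrained weights and one hidden layer, written with NumPy; the vocabulary, the layer sizes, and names such as `forward` and `w_hidden` are assumptions, and a real LLM uses many Transformer layers and looks at the whole preceding context rather than a single token.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy vocabulary (assumed for illustration); "<unk>" stands in for unknown tokens.
vocab = ["<unk>", "the", "cat", "sat", "on", "mat"]
token_to_id = {tok: i for i, tok in enumerate(vocab)}

d_model, d_hidden = 8, 16
embedding = rng.normal(size=(len(vocab), d_model))  # input layer: token id -> vector
w_hidden = rng.normal(size=(d_model, d_hidden))     # a single hidden layer
w_out = rng.normal(size=(d_hidden, len(vocab)))     # output layer: vector -> vocabulary scores


def softmax(x):
    """Turn raw scores into probabilities that sum to 1."""
    x = x - x.max()  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum()


def forward(token):
    """Map one token to a probability for every token in the vocabulary."""
    token_id = token_to_id.get(token, token_to_id["<unk>"])
    x = embedding[token_id]     # input layer
    h = np.tanh(x @ w_hidden)   # hidden layer: linear transformation plus activation
    logits = h @ w_out          # output layer: one score per vocabulary token
    return softmax(logits)


probs = forward("cat")
for tok, p in zip(vocab, probs):
    print(f"{tok:>6}: {p:.3f}")
```

Because the weights are random, the probabilities are meaningless here. Training is what shapes them into useful predictions.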

The Training Process

The journey of an LLM begins with a massive training dataset. This data can include books, articles, code, and more. Through a process called "training," the LLM learns to predict the next word in a sequence based on the previous words.

Tokenization: Text is divided into smaller units known as tokens. These could be words, subwords, or characters. Tokenization lets the model handle text systematically and process language at different levels of granularity. Splitting words into subwords in particular helps a fixed vocabulary cover variations in language, such as different tenses, plural forms, and compound words.

Embedding: Tokens are converted into numerical representations, allowing the model to process them mathematically. This is achieved through embedding layers, which map tokens to dense vectors in a high-dimensional space. Embeddings are learned during training and are crucial for the model's performance.

Training: The model is fed the input tokens and tries to predict the next token. The model's predictions are compared to the actual next token, and the model's parameters are adjusted to reduce the error. The gradients that indicate how each weight should change are calculated by backpropagation, and an optimizer such as gradient descent uses them to update the weights. This is repeated over many iterations, allowing the model to learn complex patterns and improve its language understanding.
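The three steps above (tokenization, embedding, training) can be put together in a small sketch. It assumes word-level tokenization, a model made of just an embedding table `E` and an output matrix `W`, hand-written gradients, and plain gradient descent. The corpus, sizes, and learning rate are illustrative choices, not values from any real system.

```python
import numpy as np

# 1. Tokenization: split the text into word-level tokens and assign integer ids.
text = "the cat sat on the mat the cat sat on the mat"
tokens = text.split()
vocab = sorted(set(tokens))
token_to_id = {tok: i for i, tok in enumerate(vocab)}
ids = np.array([token_to_id[t] for t in tokens])

# Each token is used to predict the token that follows it.
inputs, targets = ids[:-1], ids[1:]

# 2. Embedding: a table of dense vectors, learned together with the output weights.
rng = np.random.default_rng(0)
V, d = len(vocab), 4
E = rng.normal(scale=0.1, size=(V, d))  # embedding table
W = rng.normal(scale=0.1, size=(d, V))  # output projection


def loss_and_grads(E, W):
    """Cross-entropy loss for next-token prediction, plus its gradients."""
    n = len(inputs)
    h = E[inputs]                                        # (n, d) embedded inputs
    logits = h @ W                                       # (n, V) scores per token
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    loss = -np.log(probs[np.arange(n), targets]).mean()

    # Backpropagation: gradient of the loss with respect to each parameter.
    dlogits = probs.copy()
    dlogits[np.arange(n), targets] -= 1.0
    dlogits /= n
    dW = h.T @ dlogits
    dh = dlogits @ W.T
    dE = np.zeros_like(E)
    np.add.at(dE, inputs, dh)  # accumulate gradients for repeated tokens
    return loss, dE, dW


# 3. Training: repeat predict -> compare -> update.
learning_rate = 0.5
losses = []
for step in range(300):
    loss, dE, dW = loss_and_grads(E, W)
    losses.append(loss)
    E -= learning_rate * dE  # gradient descent update
    W -= learning_rate * dW

print(f"loss at start: {losses[0]:.3f}, loss at end: {losses[-1]:.3f}")
```

The loss cannot reach zero on this corpus because "the" is followed by both "cat" and "mat", so the best the model can do is split its probability between them.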

The Transformer Architecture

The most advanced LLMs today are built using a neural network architecture known as the Transformer. Introduced by Vaswani et al. in 2017, the Transformer architecture has revolutionized the field of natural language processing (NLP).

Self-Attention Mechanism: A key innovation of the Transformer is the self-attention mechanism. This allows the model to weigh the importance of different words in a sentence relative to each other, regardless of their position.

Encoder-Decoder Structure: The original Transformer has an encoder-decoder structure, where the encoder processes the input text and the decoder generates the output text. In practice, many LLMs, including the GPT family, use only the decoder part of the Transformer for tasks like text generation.

Layers and Parameters: Transformers are composed of multiple layers, each containing numerous parameters. The number of layers and parameters significantly impacts the model's performance. GPT-3, for example, has 96 layers and 175 billion parameters. OpenAI has not published the corresponding figures for GPT-4. Scale of this kind is part of what enables these models to generate sophisticated and contextually accurate text.
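The self-attention mechanism described above can be sketched in a few lines of NumPy. This is a single attention head using scaled dot-product attention, with a causal mask of the kind decoder-only models use so that each token attends only to itself and earlier tokens. The weight matrices `w_q`, `w_k`, `w_v` and the sizes are random placeholders assumed for illustration. A real Transformer layer adds multiple heads, residual connections, normalization, and a feed-forward block.

```python
import numpy as np


def softmax(x, axis=-1):
    """Row-wise softmax with the usual max-subtraction for stability."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention with a causal mask.

    x has shape (seq_len, d_model); returns (output, attention_weights).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)  # how strongly each token relates to each other token

    # Hide future positions: token i may only look at tokens 0..i.
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)

    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
x = rng.normal(size=(seq_len, d_model))  # stand-in for 4 embedded tokens
w_q = rng.normal(size=(d_model, d_model))
w_k = rng.normal(size=(d_model, d_model))
w_v = rng.normal(size=(d_model, d_model))

out, weights = causal_self_attention(x, w_q, w_k, w_v)
print(np.round(weights, 2))  # lower-triangular: no attention to future tokens
```

Each row of `weights` shows how much one token draws on every token up to and including itself. That is the sense in which the model "weighs the importance" of words relative to each other.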

Handling Context: Attention and Memory

A critical aspect of LLMs is their ability to handle context. The self-attention mechanism allows the model to consider all of the text within its context window, whether a sentence or several paragraphs, when generating the next token. This capability is essential for maintaining coherence and relevance, especially in longer pieces of text.

Positional Encoding: To give the model a sense of the order of words, positional encoding is used. This technique involves adding information about the position of each token in the sequence, enabling the model to distinguish between words based on their position.

Memory Mechanisms: Some advanced LLMs incorporate memory mechanisms to handle long-term dependencies in text. These mechanisms allow the model to retain information over longer stretches of text, improving its ability to generate coherent and contextually accurate responses.
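As an illustration of positional encoding, here is a sketch of the sinusoidal scheme from the original Transformer paper by Vaswani et al. It is only one option, and many models use learned or other positional schemes instead. The function name and the sizes are assumptions, and the sketch expects an even `d_model`.

```python
import numpy as np


def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) matrix of sinusoidal position vectors.

    Even dimensions use sine and odd dimensions use cosine, each at a
    different frequency. Assumes d_model is even.
    """
    positions = np.arange(seq_len)[:, None]   # (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]  # (1, d_model / 2)
    angles = positions / np.power(10000.0, dims / d_model)

    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


seq_len, d_model = 6, 8
pe = sinusoidal_positional_encoding(seq_len, d_model)

# The position vectors are added to the token embeddings before the first layer.
rng = np.random.default_rng(0)
token_embeddings = rng.normal(size=(seq_len, d_model))  # stand-in embeddings
model_input = token_embeddings + pe
print(model_input.shape)  # prints (6, 8)
```

Because every position gets a distinct vector, two occurrences of the same word at different positions reach the model as different inputs, which is how it can tell them apart.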

Conclusion

Large Language Models represent a significant leap forward in artificial intelligence, with their ability to understand and generate human language opening up new possibilities in various fields. From chatbots and virtual assistants to automated content creation and beyond, LLMs are transforming the way we interact with technology. Understanding the mechanics behind these models not only highlights their current capabilities but also sets the stage for future advancements that will continue to shape our digital landscape.

At MEII AI, we harness the power of these advanced technologies to develop our AI applications. Our platform, powered by Retrieval-Augmented Generation (RAG), offers features like Chat, Discover, and Insights, enabling enterprises to build powerful, scalable applications that search, understand, and converse through text. With industry-specific Large Language Models and embedding models, we ensure precise semantic search results and contextually accurate conversations.